Turning Apple Vision Pro’s Digital Crown is a bit like tuning your reality.

Apple Watch owners will be familiar with the Digital Crown. Early iPhone owners can recall the satisfying physical click of pressing its singular button. Anyone who remembers an iPod should be able to recall the circular motion on its wheel. On Apple Vision Pro, it is a dial on top that jogs you along the spectrum of immersion so quickly it feels like magic.

Apple’s passthrough magic might help explain why the industry is making such big shifts before this $3,500 spatial computer even ships.

To recap:

Pico laid off the vast majority of people building a Quest also-ran while planning long-term to build something resembling Apple’s work.

Saudi Arabia-owned Magic Leap took $500+ million more from the “sovereign” investment arm of the state to continue developing its technologies for transparent optical AR.

Microsoft formally killed its PC VR platform.

Meta is firing on all cylinders with Quest 3, AI-powered Ray-Ban glasses, and the forthcoming rumored Quest 3 Lite to differentiate itself and stay ahead of what Apple is doing.

Samsung (with Google) and Sony have chosen Qualcomm’s XR2+ Gen 2 as the engine of their upcoming headsets, with Samsung reportedly going back to the drawing board after seeing Vision Pro announced.

Valve brought Steam Link to the Quest store while seemingly still working behind the scenes on its own new headset.

All this happened before a single customer walked into an Apple Store and turned that dial to see how it changes the reality around them.

I wrote after my initial demo that I believe many people who turn that dial are unlikely to realize the physical world they see is a reconstruction on an opaque screen. This comes as researchers at universities like Stanford are actively investigating the difference between what your eyes see unaided and what they see through these reconstructions.

On the eve of Apple Vision Pro pre-orders, I turned the dial from the view of real-world passthrough to a fully synthetic environment. With each degree, the physical walls of the room in Manhattan’s Tribeca area steadily faded away, and then the ground before my feet. Finally, Vision Pro left just my stomach and arms as the only remnants of physical reality. A couple degrees more, though, and my stomach popped out of existence. A few degrees further and my arms faded away too. That was too much, so I turned it back to just my arms and hands floating in a completely immersive environment. The setting followed me into Keynote, where I was struck by an incredibly well-lit, fully immersive environment for practicing speaking in a virtual boardroom setting, my arms gesturing in front of me with thin white outlines, a reminder of the physical white wall that is their actual background.

I suspect the above paragraph might make a couple long-time readers twitchy for their wallets. Before you go strain your marriage or debt load, though, here are some of the asterisks.

Apple representatives told me I needed to stay on path during my second demo. I tried launching Super Fruit Ninja from the menu to see what Apple’s arm-swinging equivalent of Beat Saber is all about, but it didn’t launch for me.

Apple’s Persona technology for virtual face-to-face spatial calls is launching as a beta and the company has yet to publicly demonstrate the scanning process for either Personas or Vision Pro’s most visible differentiator – the external EyeSight display.

EyeSight is presented via a lenticular display, which appeared to have some degree of depth. It is extremely interesting, and was shown in my demo by a standing employee whose Vision Pro displayed representations of his eyes. I broke some of the display’s illusion of depth by squatting to view it from a dramatically different vertical angle.

EyeSight and Personas may be some of Apple’s most interesting but least-ready-for-primetime technologies. I would not be surprised if their inclusion is necessary both to jumpstart developers and to develop a next-generation headset with more comfort, lower cost, and higher-quality versions of these same features. We’re going to need to get scanned and have extensive real-time communications with Personas before we’re able to judge just how far along Apple is here. A few researchers at Meta, and maybe Google or Amazon, are likely waiting for those exact same benchmarks.

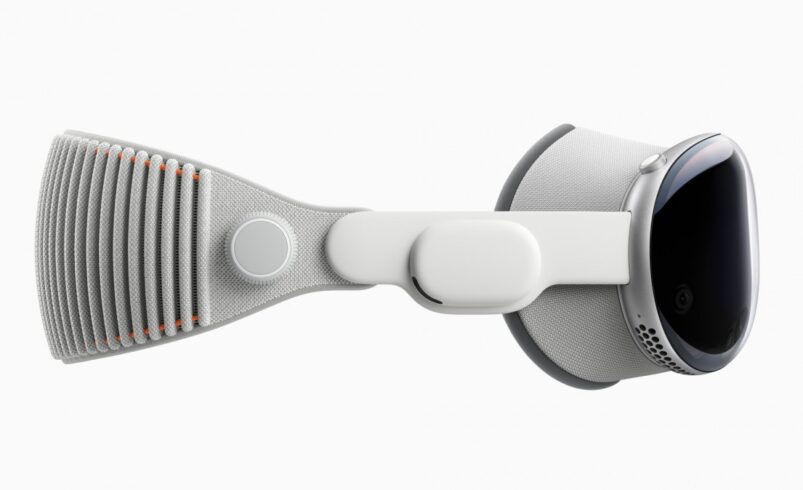

I used the Dual Loop Band exclusively in my second demo. Overall comfort felt improved compared to the first time, though there was a small amount of forgettable light leakage out the bottom of the headset the second time. In both demos, Apple offered no content to test with quick movements and the overall amount of time in headset wasn’t enough to say anything more substantial about long-term comfort with Apple’s two included straps. Apple confirmed the top strap from the Dual Loop Band is not compatible with the Solo Knit Band.

In my first demo, I was so impressed by Apple’s passthrough that I wrote it calls into question the future of transparent optics for AR. After my second demo, I still believe this, though I noticed more limitations to Apple’s passthrough this time around. Still, the quality of passthrough on Apple Vision Pro, and the ease with which it can be customized to my liking, make for an altogether better experience than Meta Quest 3.

Of course it is better: Apple Vision Pro’s reality distortion field starts at $3,500, compared with Quest 3 at just $500. But where Meta anchored itself around standalone VR gaming, Apple built its entire strategy in VR & AR around a much higher quality core vision of your real environment that you can subtract from or add to exactly to your liking – by simply turning that dial.

Spatial Videos, Photos & AirDrop

My favorite moment of the second carefully guided demo of Apple Vision Pro was zooming in on high resolution photos with a two-handed gesture and then quickly flicking over to the next ones. This felt exactly right. When I’m able to use Vision Pro with my own photo and video library, I hope to effortlessly flick through thousands of images and videos this way.

Apple’s aim is to convey greater emotional depth with spatial videos shot by Vision Pro or an iPhone 15 Pro. From what I saw of the provided example videos, they succeed. I wanted to AirDrop my own spatial video to the headset for viewing but Apple declined.

Pre-Orders & Reviews

UploadVR plans to pre-order Apple Vision Pro to have the full consumer experience of buying the headset, as well as for future coverage, development and independent technical analysis by my colleague David Heaney.

That is not a recommendation of any kind for our readers. On the eve of pre-orders for a computer that’s more expensive than some cars, it is disappointing we can’t say something more useful for our readers right now.

We do, however, highly recommend all US-based readers attempt to schedule a demo for Apple Vision Pro at their local Apple Store starting with its availability on February 2.

Source: https://www.uploadvr.com/apple-vision-pro-second-impressions/